Automated High Availability in kubeadm v1.15: Batteries Included But Swappable

Authors:

- Lucas Käldström, @luxas, SIG Cluster Lifecycle co-chair & kubeadm subproject owner, Weaveworks

- Fabrizio Pandini, @fabriziopandini, kubeadm subproject owner, Independent

kubeadm is a tool that enables Kubernetes administrators to quickly and easily bootstrap minimum viable clusters that are fully compliant with Certified Kubernetes guidelines. It’s been under active development by SIG Cluster Lifecycle since 2016 and graduated from beta to generally available (GA) at the end of 2018.

After this important milestone, the kubeadm team is now focused on the stability of the core feature set and working on maturing existing features.

With this post, we are introducing the improvements made in the v1.15 release of kubeadm.

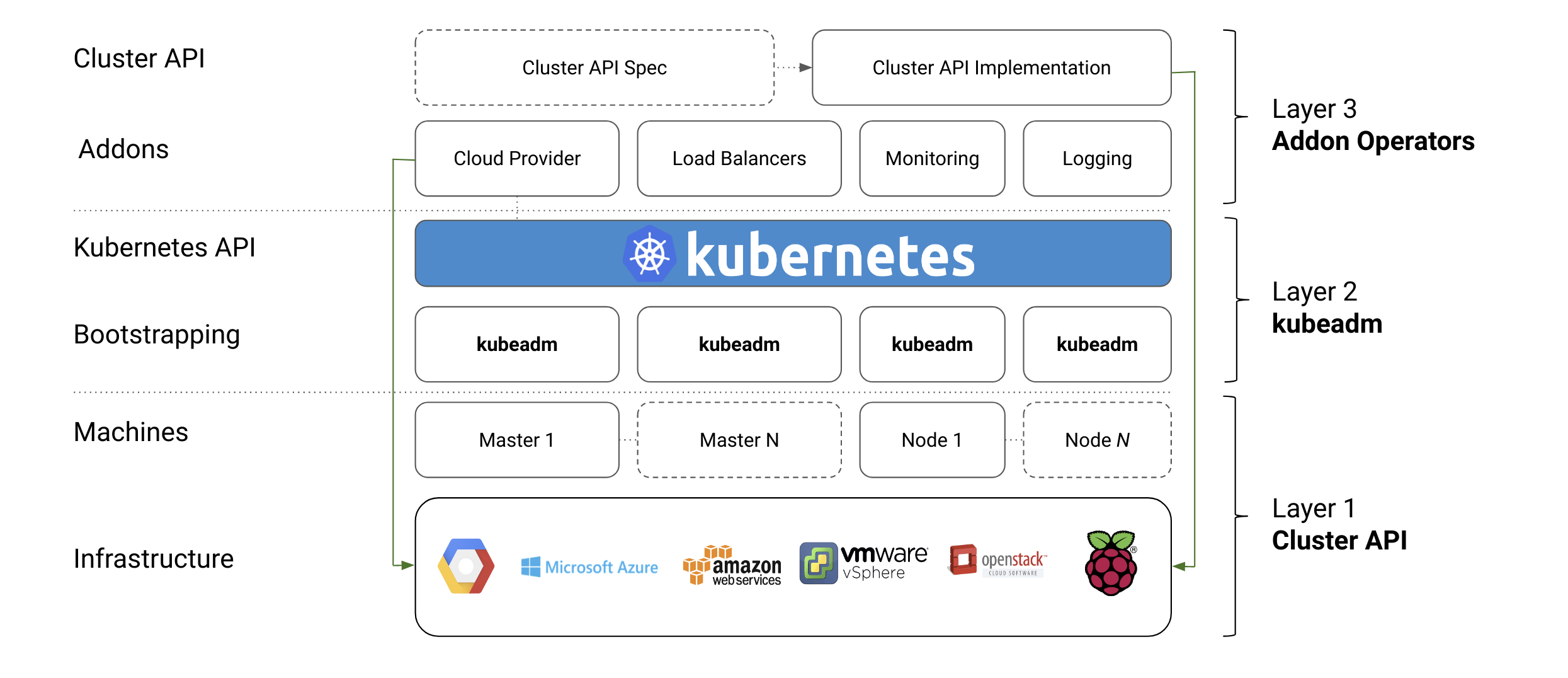

The scope of kubeadm

kubeadm is focused on performing the actions necessary to get a minimum viable, secure cluster up and running in a user-friendly way. kubeadm's scope is limited to the local machine’s filesystem and the Kubernetes API, and it is intended to be a composable building block for higher-level tools.

The core of the kubeadm interface is quite simple: new control plane nodes are created by running kubeadm init, and worker nodes are joined to the control plane by running kubeadm join. Also included are common utilities for managing already bootstrapped clusters, such as control plane upgrades and token and certificate renewal.

To keep kubeadm lean, focused, and vendor/infrastructure agnostic, the following tasks are out of scope:

- Infrastructure provisioning

- Third-party networking

- Non-critical add-ons, e.g. monitoring, logging, and visualization

- Specific cloud provider integrations

Those tasks are addressed by other SIG Cluster Lifecycle projects, such as the Cluster API for infrastructure provisioning and management.

Instead, kubeadm covers only the common denominator in every Kubernetes cluster: the control plane.

What’s new in kubeadm v1.15?

High Availability to Beta

We are delighted to announce that automated support for High Availability clusters is graduating to Beta in kubeadm v1.15. Let’s give a great shout out to all the contributors that helped in this effort and to the early adopter users for the great feedback received so far!

But how does automated High Availability work in kubeadm?

The great news is that you can use the familiar kubeadm init or kubeadm join workflow for creating a high availability cluster as well, with the only difference being that you have to pass the --control-plane flag to kubeadm join when adding more control plane nodes.

A 3-minute screencast of this feature is here:

In a nutshell:

- Set up a Load Balancer. You need an external load balancer; providing this, however, is out of scope of kubeadm.

  - The community will provide a set of reference implementations for this task though

  - HAproxy, Envoy, or a similar Load Balancer from a cloud provider work well

- Run kubeadm init on the first control plane node, with small modifications:

  - Create a kubeadm Config File

  - In the config file, set the controlPlaneEndpoint field to where your Load Balancer can be reached.

  - Run init with the --upload-certs flag, like this: sudo kubeadm init --config=kubeadm-config.yaml --upload-certs

- Run kubeadm join --control-plane at any time when you want to expand the set of control plane nodes

  - Both control-plane and normal nodes can be joined in any order, at any time

  - The command to run will be given by kubeadm init above, and is of the form:

        kubeadm join [LB endpoint] \
            --token ... \
            --discovery-token-ca-cert-hash sha256:... \
            --control-plane --certificate-key ...
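As a rough illustration of the first-node steps above, the following sketch writes a minimal config file and then runs init. The load balancer address lb.example.com:6443 is a made-up placeholder, and the v1beta2 ClusterConfiguration shown here is only the smallest set of fields that seems sufficient for this flow; real clusters will likely need more.

```shell
#!/bin/sh
# Sketch: prepare a kubeadm config for an HA control plane and initialise
# the first control plane node.
set -eu

# Placeholder (assumption): replace with the DNS name and port of your load balancer.
LB_ENDPOINT="${LB_ENDPOINT:-lb.example.com:6443}"

# Write a minimal v1beta2 ClusterConfiguration that points at the load balancer.
cat > kubeadm-config.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: stable
controlPlaneEndpoint: "${LB_ENDPOINT}"
EOF

# Only attempt the init when kubeadm is actually installed on this machine.
if command -v kubeadm >/dev/null 2>&1; then
  sudo kubeadm init --config=kubeadm-config.yaml --upload-certs
else
  echo "kubeadm not found; wrote kubeadm-config.yaml only"
fi
```

On success, kubeadm init prints the kubeadm join commands to use on the remaining control plane and worker nodes.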

For those interested in the details, there are many things that make this functionality possible. Most notably:

- Automated certificate transfer. kubeadm implements an automatic certificate copy feature to automate the distribution of all the certificate authorities/keys that must be shared across all the control-plane nodes in order to get your cluster to work. This feature can be activated by passing --upload-certs to kubeadm init; see configure and deploy an HA control plane for more details. This is an explicit opt-in feature; you can also distribute the certificates manually in your preferred way.

- Dynamically-growing etcd cluster. When you're not providing an external etcd cluster, kubeadm automatically adds a new etcd member, running as a static pod. All the etcd members are joined in a “stacked” etcd cluster that grows together with your high availability control-plane.

- Concurrent joining. Similarly to what is already implemented for worker nodes, you can join control-plane nodes whenever, in any order, or even in parallel.

- Upgradable. The kubeadm upgrade workflow was improved in order to properly handle the HA scenario. After starting the upgrade with kubeadm upgrade apply as usual, users can now complete the upgrade process by using kubeadm upgrade node both on the remaining control-plane nodes and on worker nodes.

Finally, it is also worth noting that an entirely new test suite has been created specifically to ensure that High Availability in kubeadm stays stable over time.

Certificate Management

Certificate management has become simpler and more robust in kubeadm v1.15.

If you perform Kubernetes version upgrades regularly, kubeadm will now take care of keeping your cluster up to date and reasonably secure by automatically rotating all your certificates at kubeadm upgrade time.

If, instead, you prefer to renew your certificates manually, you can opt out of the automatic certificate renewal by passing --certificate-renewal=false to kubeadm upgrade commands. Then you can perform manual certificate renewal with the kubeadm alpha certs renew command.
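A minimal sketch of the manual path might look like the following. The target version v1.15.0 is a placeholder, the function name is made up for illustration, and the commands use the names as of kubeadm v1.15, so later releases may differ.

```shell
#!/bin/sh
# Sketch: upgrade without automatic certificate renewal, then renew by hand.
set -eu

# Placeholder target version (assumption for this sketch).
TARGET_VERSION="${TARGET_VERSION:-v1.15.0}"

# Hypothetical helper: skip renewal during the upgrade, then renew all
# certificates explicitly with the alpha certs command.
upgrade_with_manual_renewal() {
  kubeadm upgrade apply "$TARGET_VERSION" --certificate-renewal=false
  kubeadm alpha certs renew all
}

# Only run when kubeadm is installed on this machine.
if command -v kubeadm >/dev/null 2>&1; then
  upgrade_with_manual_renewal
else
  echo "kubeadm not found; nothing to do"
fi
```

Both commands typically need root privileges on a control plane node, so in practice you would run the script with sudo.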

But there is more.

A new command kubeadm alpha certs check-expiration was introduced to allow users to check certificate expiration. The output is similar to this:

    CERTIFICATE                EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
    admin.conf                 May 15, 2020 13:03 UTC   364d            false
    apiserver                  May 15, 2020 13:00 UTC   364d            false
    apiserver-etcd-client      May 15, 2020 13:00 UTC   364d            false
    apiserver-kubelet-client   May 15, 2020 13:00 UTC   364d            false
    controller-manager.conf    May 15, 2020 13:03 UTC   364d            false
    etcd-healthcheck-client    May 15, 2020 13:00 UTC   364d            false
    etcd-peer                  May 15, 2020 13:00 UTC   364d            false
    etcd-server                May 15, 2020 13:00 UTC   364d            false
    front-proxy-client         May 15, 2020 13:00 UTC   364d            false
    scheduler.conf             May 15, 2020 13:03 UTC   364d            false
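Output in this shape is easy to post-process. The sketch below flags certificates that are close to expiry; the helper name expiring_certs is made up, and it assumes the residual time is the second-to-last column and is written as a number of days followed by d, as in the listing above. Rows in any other form are ignored.

```shell
#!/bin/sh
# Sketch: list certificates whose residual time is below a threshold.
set -eu

# expiring_certs THRESHOLD_DAYS
# Reads check-expiration style output on stdin and prints the name of every
# certificate with fewer than THRESHOLD_DAYS days left.
expiring_certs() {
  awk -v limit="$1" '
    NF < 2 { next }                        # skip blank or one-word lines
    {
      residual = $(NF - 1)                 # second-to-last column
      if (residual ~ /^[0-9]+d$/) {        # header and other rows do not match
        sub(/d$/, "", residual)
        if (residual + 0 < limit + 0) print $1
      }
    }'
}

# Example input in the format shown above (the 20d value is invented).
expiring_certs 30 <<'EOF'
CERTIFICATE                EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
admin.conf                 May 15, 2020 13:03 UTC   364d            false
apiserver                  May 15, 2020 13:00 UTC   20d             false
EOF
# prints: apiserver
```

On a real control plane node, the same function could be fed directly, for example: kubeadm alpha certs check-expiration | expiring_certs 30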

You should also expect more work around certificate management in kubeadm in the next releases, with the introduction of ECDSA keys and improved support for CA key rotation. Additionally, the commands staged under kubeadm alpha are expected to move to the top level soon.

Improved Configuration File Format

You can argue that there are hardly two Kubernetes clusters that are configured equally, and hence there is a need to customize how the cluster is set up depending on the environment. One way of configuring a component is via flags. However, this has some scalability limitations:

- Hard to maintain. When a component’s flag set grows beyond 30 flags, configuring it becomes really painful.

- Complex upgrades. When flags are removed, deprecated or changed, you need to upgrade the binary at the same time as the arguments.

- Key-value limited. There are simply many types of configuration you can’t express with the --key=value syntax.

- Imperative. In contrast to Kubernetes API objects themselves that are declaratively specified, flag arguments are imperative by design.

This is a key problem for Kubernetes components in general, as some components have 150+ flags. With kubeadm we’re pioneering the ComponentConfig effort, and providing users with a small set of flags, but most importantly, a declarative and versioned configuration file for advanced use cases. We call this ComponentConfig. It has the following characteristics:

- Upgradable: You can upgrade the binary, and still use the existing, older schema. Automatic migrations.

- Programmable. Configuration expressed in JSON/YAML allows for consistent, and programmable manipulation

- Expressible. Advanced patterns of configuration can be used and applied.

- Declarative. OpenAPI information can easily be exposed / used for doc generation

In kubeadm v1.15, we have improved the structure and are releasing the new v1beta2 format. It is important to note that the existing v1beta1 format released in v1.13 will continue to work for several releases. This means you can upgrade kubeadm to v1.15, and still use your existing v1beta1 configuration files. When you’re ready to take advantage of the improvements made in v1beta2, you can perform an automatic schema migration using the kubeadm config migrate command.
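A migration could look roughly like the sketch below. The file names and the v1beta1 sample content are placeholders for illustration, and the migrate step only works with a kubeadm release that still reads v1beta1 files.

```shell
#!/bin/sh
# Sketch: migrate an older kubeadm config file to the newer schema.
set -eu

# Stand-in for an existing v1beta1 config (contents are illustrative only).
cat > kubeadm-v1beta1.yaml <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.14.0
EOF

# Only attempt the migration when kubeadm is installed on this machine.
if command -v kubeadm >/dev/null 2>&1; then
  kubeadm config migrate \
    --old-config kubeadm-v1beta1.yaml \
    --new-config kubeadm-v1beta2.yaml
else
  echo "kubeadm not found; wrote kubeadm-v1beta1.yaml only"
fi
```

The old file is left untouched, so you can compare the two versions before switching over.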

During the course of the year, we’re looking forward to graduating the schema to General Availability, v1. If you’re interested in this effort, you can also join WG Component Standard.

What’s next?

2019 plans

We are focusing our efforts around graduating the configuration file format to GA (kubeadm.k8s.io/v1), graduating this super-easy High Availability flow to stable, and providing better tools for automatically rotating the certificates needed for running the cluster.

In addition to these three key milestones of our charter, we want to improve the following areas:

- Support joining Windows nodes to a kubeadm cluster (with end-to-end tests)

- Improve the upstream CI signal, mainly for HA and upgrades

- Consolidate how Kubernetes artifacts are built and installed

- Utilize Kustomize to allow for advanced, layered and declarative configuration

We make no guarantees that these deliverables will ship this year though, as this is a community effort. If you want to see these things happen, please join our SIG and start contributing! The ComponentConfig issues in particular need more attention.

kubeadm now has a logo!

Dan Kohn offered CNCF’s help with creating a logo for kubeadm in this cycle. Alex Contini created 19 (!) different logo options for the community to vote on. The public poll was active for around a week, and we got 386 answers. The winning option got 17.4% of the votes. In other words, now we have an official logo!

Contributing

If this all sounds exciting, join us!

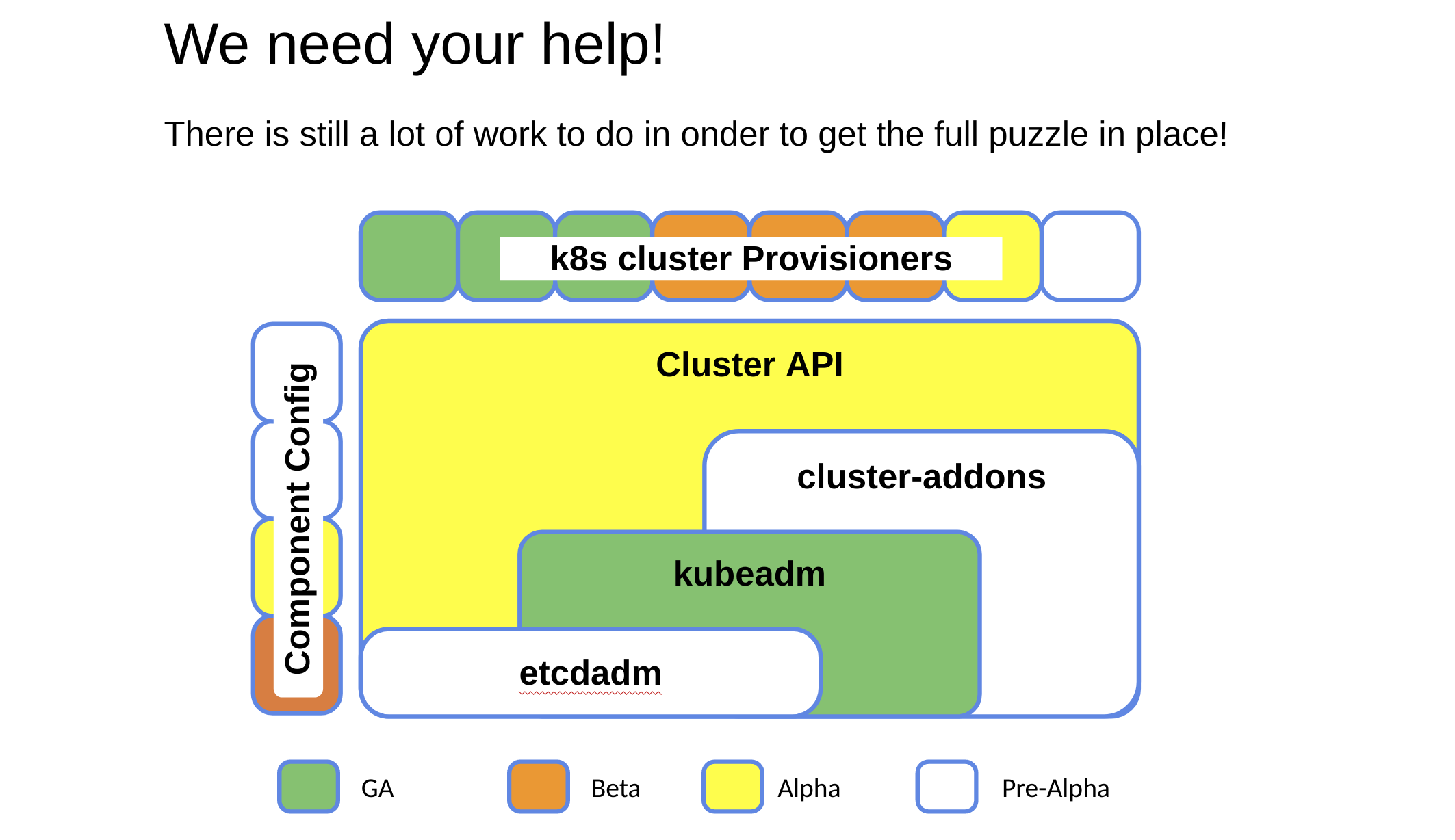

SIG Cluster Lifecycle has many different subprojects, where kubeadm is one of them. In the following picture you can see that there are many pieces in the puzzle, and we have a lot still to do.

Some handy links if you want to start contributing:

- You can watch the SIG Cluster Lifecycle New Contributor Onboarding session on YouTube.

- Look out for “good first issue”, “help wanted” and “sig/cluster-lifecycle” labeled issues in our repositories (e.g. kubernetes/kubeadm)

- Join #sig-cluster-lifecycle, #kubeadm, #cluster-api, #minikube, #kind, etc. in Slack

- Join our public, bi-weekly SIG Cluster Lifecycle Zoom meeting on Tuesdays at 9am PT

  - Check out the Meeting Notes to join

- Join our public, weekly kubeadm Office Hours Zoom meeting on Wednesdays at 9am PT

  - Check out the Meeting Notes to join

- Check out the SIG Cluster Lifecycle Intro or the kubeadm Deep Dive sessions from KubeCon Barcelona

Thank You

This release wouldn’t have been possible without the help of the great people that have been contributing to SIG Cluster Lifecycle and kubeadm. We would like to thank all the kubeadm contributors and companies making it possible for their developers to work on Kubernetes!

In particular, we would like to thank the kubeadm subproject owners that made this possible:

- Tim St. Clair, @timothysc, SIG Cluster Lifecycle co-chair, VMware

- Lucas Käldström, @luxas, SIG Cluster Lifecycle co-chair, Weaveworks

- Fabrizio Pandini, @fabriziopandini, Independent

- Lubomir I. Ivanov, @neolit123, VMware

- Rostislav M. Georgiev, @rosti, VMware